In this article, we continue adding functionality to the serverless EC2 inventory stack we created in part 1.

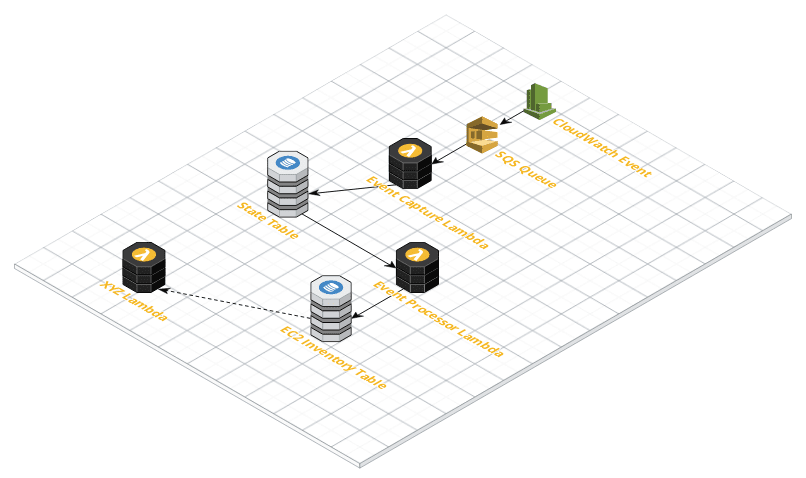

If we refer back to our architecture diagram, we will be creating the following components:

- State change SQS queue

- DynamoDB tables for state change and EC2 inventory

- IAM policies and roles

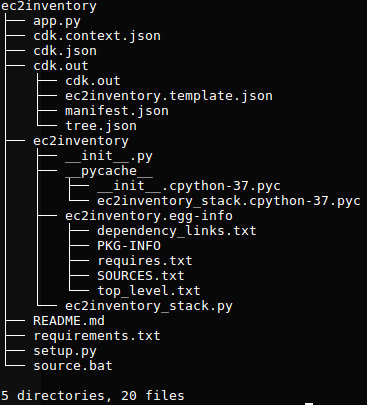

Before we dive into the details of how we are going to build those components, let’s take a look at our existing CDK project structure.

As you can see, the AWS CDK created quite a few files and directories. There are two files we will be working with a lot:

- ec2inventory_stack.py – Our stack is defined here

- setup.py – Additional dependencies are defined here

We will start by adding dependencies to setup.py. Doing this first means we don’t have to keep switching back to it later.

Edit setup.py using your favorite editor; I use VS Code, but any editor will work. In there you will see what the CDK created for us. Look for the install_requires=[...] section.

    install_requires=[
        "aws-cdk.core==1.24.0",
    ],

As you can see, we only have the aws-cdk.core module there at the moment. Let’s add all the additional modules we’ll need for this project.

    install_requires=[
        "aws-cdk.core==1.24.0",
        "aws-cdk.aws_iam",
        "aws-cdk.aws_sqs",
        "aws-cdk.aws_dynamodb",
        "aws-cdk.aws_events",
        "aws-cdk.aws_events_targets",
        "aws-cdk.aws_lambda",
        "aws-cdk.aws_lambda_event_sources",
    ],

In order to use these additional modules, we must install them in our local environment.

$ pip install -r requirements.txt
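
One thing worth considering: only aws-cdk.core is pinned in the list above, so pip is free to pick a different release for the other modules. CDK v1 modules are generally expected to share a single version, so a small helper along these lines could build the list with every module pinned to the same release. The names CDK_VERSION, CDK_MODULES and pinned_requirements are illustrative and not part of the generated project.

```python
# Sketch: pin every CDK module to the same release as aws-cdk.core.
# CDK_VERSION, CDK_MODULES and pinned_requirements are illustrative names.
CDK_VERSION = "1.24.0"

CDK_MODULES = [
    "core",
    "aws_iam",
    "aws_sqs",
    "aws_dynamodb",
    "aws_events",
    "aws_events_targets",
    "aws_lambda",
    "aws_lambda_event_sources",
]


def pinned_requirements(modules, version):
    """Return pip requirement strings such as 'aws-cdk.core==1.24.0'."""
    return [f"aws-cdk.{name}=={version}" for name in modules]


# This list could be passed to setup(install_requires=...) in setup.py.
install_requires = pinned_requirements(CDK_MODULES, CDK_VERSION)
```

Keeping the version in one variable also means a later upgrade is a one-line change.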

If you don’t add these packages to your setup.py and install the requirements, you will get import errors.

    $ cdk list
    Traceback (most recent call last):
      File "app.py", line 5, in <module>
        from ec2inventory.ec2inventory_stack import Ec2InventoryStack
      File "/home/odachi/letsfigureout/ec2inventory/ec2inventory/ec2inventory_stack.py", line 2, in <module>
        from aws_cdk import Queue
    ImportError: cannot import name 'Queue' from 'aws_cdk' (unknown location)
    Subprocess exited with error 1
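
If you would rather catch a missing package before running cdk list, a quick check like the following can help. This is only a sketch using the standard library; REQUIRED_MODULES and missing_modules are names I made up for illustration, and the module list simply mirrors the packages added to setup.py.

```python
import importlib.util

# Import names corresponding to the packages listed in setup.py (illustrative).
REQUIRED_MODULES = [
    "aws_cdk.core",
    "aws_cdk.aws_iam",
    "aws_cdk.aws_sqs",
    "aws_cdk.aws_dynamodb",
    "aws_cdk.aws_events",
    "aws_cdk.aws_events_targets",
    "aws_cdk.aws_lambda",
    "aws_cdk.aws_lambda_event_sources",
]


def missing_modules(names):
    """Return the subset of module names that cannot be found."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except ImportError:
            # Raised when a parent package (for example aws_cdk) is absent.
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    for name in missing_modules(REQUIRED_MODULES):
        print(f"missing: {name}")
```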

Now we are ready to start defining the rest of the stack.

State change SQS queue

Currently, we don’t have much defined in our ec2inventory_stack.py. Let’s start by defining a state change SQS queue.

We will start by updating our imports to bring in the Queue class from aws_sqs, and then define our queue within our Ec2InventoryStack.

    from aws_cdk import core
    from aws_cdk.aws_sqs import Queue


    class Ec2InventoryStack(core.Stack):

        def __init__(self, scope: core.Construct, id: str, **kwargs) -> None:
            super().__init__(scope, id, **kwargs)

            # SQS queue
            state_change_sqs = Queue(
                self,
                "state_change_sqs",
                visibility_timeout=core.Duration.seconds(60)
            )

We import the Queue class from the aws_sqs module and define the queue inside the stack’s __init__ method. In the definition I set a custom visibility_timeout of 60 seconds; by default, SQS queues have a visibility timeout of 30 seconds.
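
If the visibility timeout is new to you, the toy model below may help. It is not SQS and does not use any AWS API; ToyQueue is a made-up in-memory class that only illustrates the idea that a received message stays hidden from other consumers until the timeout expires, and is delivered again if nobody deleted it in the meantime.

```python
from typing import Dict, Optional, Tuple


class ToyQueue:
    """In-memory stand-in for a queue with a visibility timeout (not real SQS)."""

    def __init__(self, visibility_timeout: float = 30.0) -> None:
        self.visibility_timeout = visibility_timeout
        # message id -> (body, time at which the message becomes visible)
        self._messages: Dict[int, Tuple[str, float]] = {}
        self._next_id = 0

    def send(self, body: str, now: float) -> int:
        message_id = self._next_id
        self._next_id += 1
        self._messages[message_id] = (body, now)  # visible immediately
        return message_id

    def receive(self, now: float) -> Optional[Tuple[int, str]]:
        for message_id, (body, visible_at) in self._messages.items():
            if visible_at <= now:
                # Hide the message until the visibility timeout has passed.
                self._messages[message_id] = (body, now + self.visibility_timeout)
                return message_id, body
        return None

    def delete(self, message_id: int) -> None:
        self._messages.pop(message_id, None)


queue = ToyQueue(visibility_timeout=60)
queue.send("i-0abc stopped", now=0)
first = queue.receive(now=0)    # delivered to a consumer
second = queue.receive(now=30)  # nothing: the message is still hidden
third = queue.receive(now=60)   # delivered again, because it was never deleted
```

In practice this is why the timeout should comfortably exceed the time a consumer needs to process and delete a message.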

DynamoDB tables

Again, we will update our imports; this time we’ll be adding DynamoDB-related imports.

    from aws_cdk.aws_dynamodb import Table, Attribute, AttributeType, BillingMode, StreamViewType

Additionally, we define the two tables inside Ec2InventoryStack. The two tables differ slightly, so I will explain in a little more detail what is happening.

            # EC2 state changes
            tb_states = Table(
                self,
                "ec2_states",
                partition_key=Attribute(name="instance-id", type=AttributeType.STRING),
                sort_key=Attribute(name="time", type=AttributeType.STRING),
                billing_mode=BillingMode.PAY_PER_REQUEST,
                removal_policy=core.RemovalPolicy.DESTROY,
                stream=StreamViewType.NEW_IMAGE)

            # EC2 inventory
            tb_inventory = Table(
                self,
                "ec2_inventory",
                partition_key=Attribute(name="instance-id", type=AttributeType.STRING),
                sort_key=Attribute(name="time", type=AttributeType.STRING),
                billing_mode=BillingMode.PAY_PER_REQUEST,
                removal_policy=core.RemovalPolicy.DESTROY,
                stream=StreamViewType.KEYS_ONLY)

In both of the table definitions we define a partition key of instance-id and a sort key of time. As you may be aware, EC2 instance IDs are unique, which makes instance-id a prime candidate for a partition key.
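
Because the time sort key is a STRING, items that share an instance-id are ordered by plain string comparison. The article does not say which format the time values use; assuming they are fixed-width ISO 8601 timestamps in UTC, string order and chronological order agree, as this small sketch shows. The helper name to_sort_key is made up for illustration.

```python
from datetime import datetime, timezone


def to_sort_key(moment: datetime) -> str:
    """Format an aware datetime as a fixed-width UTC ISO 8601 string."""
    return moment.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


# Three hypothetical state change times, deliberately out of order.
events = [
    datetime(2020, 3, 1, 12, 0, 0, tzinfo=timezone.utc),
    datetime(2020, 2, 28, 23, 59, 59, tzinfo=timezone.utc),
    datetime(2020, 3, 1, 9, 30, 0, tzinfo=timezone.utc),
]

# Sorting the strings gives the same order as sorting the datetimes.
keys_sorted_as_strings = sorted(to_sort_key(event) for event in events)
keys_sorted_by_time = [to_sort_key(event) for event in sorted(events)]
```

A format without zero padding, or one that mixes time zones, would not sort this way.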

As we aren’t quite sure what our read and write capacity will be for either of these tables, I would recommend using on-demand capacity, that is, BillingMode.PAY_PER_REQUEST.

There are several factors to consider when choosing the required capacity for DynamoDB, which I won’t go into here. If you want to learn more about provisioning capacity for DynamoDB, see the article on Calculating WCU and RCU for Amazon DynamoDB.

In both tables I have set the removal_policy to DESTROY. The default behavior is to retain the tables, which means you can end up with a lot of leftover tables lying around; that is not ideal, especially while you’re developing.

Lastly, I have defined a stream on each table: ec2_states emits the new item image (NEW_IMAGE), while ec2_inventory emits only the keys (KEYS_ONLY). We will use a table stream as the event source for our EC2 inventory Lambda.
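
To give a feel for what the stream view type means for a consumer, here is a minimal sketch of a function that reads DynamoDB stream records in the shape Lambda delivers them. The function name summarize_records, the sample event and the state attribute are made up for illustration; this is not the Lambda code from this series. With NEW_IMAGE the record carries the full new item, while with KEYS_ONLY only the key attributes are present.

```python
def summarize_records(event):
    """Summarize each stream record: event type, string keys, and image presence."""
    summaries = []
    for record in event.get("Records", []):
        change = record["dynamodb"]
        # Both keys in this article are STRING attributes, hence the "S" lookup.
        keys = {name: value["S"] for name, value in change["Keys"].items()}
        summaries.append({
            "event": record["eventName"],
            "keys": keys,
            "has_new_image": "NewImage" in change,
        })
    return summaries


# Hypothetical event with one NEW_IMAGE record and one KEYS_ONLY record.
sample_keys = {
    "instance-id": {"S": "i-0123456789abcdef0"},
    "time": {"S": "2020-03-01T12:00:00Z"},
}
sample_event = {
    "Records": [
        {
            "eventName": "INSERT",
            "dynamodb": {
                "Keys": sample_keys,
                "NewImage": dict(sample_keys, state={"S": "running"}),
                "StreamViewType": "NEW_IMAGE",
            },
        },
        {
            "eventName": "MODIFY",
            "dynamodb": {"Keys": sample_keys, "StreamViewType": "KEYS_ONLY"},
        },
    ]
}

summaries = summarize_records(sample_event)
```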

IAM policies and roles

Continuing on, we’ll add IAM roles and policies. I often find that IAM policies require the most rework, because there are a lot of permissions and I want to grant only the bare minimum permissions a role requires to achieve its purpose.

Again, we will start with adding some additional imports.

    from aws_cdk.aws_iam import (
        Role,
        Policy,
        Effect,
        PolicyStatement,
        ServicePrincipal,
        ManagedPolicy,
    )

Now for the policies. There are a couple of different ways these could have been defined; I decided to create them as standalone policies and then attach them to a role. This makes them easier to copy and paste into a new project if needed.

            # IAM Policies
            pol_ec2_states_ro = ManagedPolicy(
                self, "pol_EC2StatesReadOnly",
                statements=[
                    PolicyStatement(
                        effect=Effect.ALLOW,
                        actions=[
                            "dynamodb:DescribeStream",
                            "dynamodb:GetRecords",
                            "dynamodb:GetItem",
                            "dynamodb:GetShardIterator",
                            "dynamodb:ListStreams"
                        ],
                        # The stream actions are authorized against the stream ARN,
                        # so it is listed alongside the table ARN.
                        resources=[tb_states.table_arn, tb_states.table_stream_arn]
                    )])

            pol_ec2_states_rwd = ManagedPolicy(
                self, "pol_EC2StatesWriteDelete",
                statements=[
                    PolicyStatement(
                        effect=Effect.ALLOW,
                        actions=[
                            "dynamodb:DeleteItem",
                            "dynamodb:DescribeTable",
                            "dynamodb:PutItem",
                            "dynamodb:Query",
                            "dynamodb:UpdateItem"
                        ],
                        resources=[tb_states.table_arn]
                    )])

            pol_ec2_inventory_full = ManagedPolicy(
                self, "pol_EC2InventoryFullAccess",
                statements=[
                    PolicyStatement(
                        effect=Effect.ALLOW,
                        actions=[
                            "dynamodb:DeleteItem",
                            "dynamodb:DescribeTable",
                            "dynamodb:GetItem",
                            "dynamodb:PutItem",
                            "dynamodb:Query",
                            "dynamodb:UpdateItem"
                        ],
                        resources=[tb_inventory.table_arn]
                    )])

            # EC2 Describe* actions do not support resource-level permissions,
            # hence the wildcard resource.
            pol_lambda_describe_ec2 = ManagedPolicy(
                self, "pol_LambdaDescribeEC2",
                statements=[
                    PolicyStatement(
                        effect=Effect.ALLOW,
                        actions=[
                            "ec2:Describe*"
                        ],
                        resources=["*"]
                    )])

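            # IAM Roles
            # NOTE (assumption): basic_exec and sqs_access are used below but are
            # not defined in this listing. The two AWS managed policies here are
            # a guess at what was intended; substitute your own if they differ.
            basic_exec = ManagedPolicy.from_aws_managed_policy_name(
                "service-role/AWSLambdaBasicExecutionRole")
            sqs_access = ManagedPolicy.from_aws_managed_policy_name(
                "AmazonSQSFullAccess")

            rl_event_capture = Role(
                self,
                'rl_state_capture',
                assumed_by=ServicePrincipal('lambda.amazonaws.com'),
                managed_policies=[
                    basic_exec,
                    sqs_access,
                    pol_ec2_states_rwd])

            rl_event_processor = Role(
                self,
                'rl_state_processor',
                assumed_by=ServicePrincipal('lambda.amazonaws.com'),
                managed_policies=[
                    basic_exec,
                    pol_ec2_states_ro,
                    pol_ec2_states_rwd,
                    pol_ec2_inventory_full,
                    pol_lambda_describe_ec2])

The policies look very similar to how you would write them in a CloudFormation template. I find the roles a lot simpler to define, since we can just pass the policies in as a list of variables.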
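
To make the CloudFormation comparison a little more concrete, here is a rough sketch of the kind of IAM policy document that a statement such as the one in pol_LambdaDescribeEC2 corresponds to. It is assembled by hand with the standard library, not taken from the CDK’s synthesized template, so treat the exact layout as illustrative; policy_document is a made-up helper name.

```python
import json


def policy_document(actions, resources, effect="Allow"):
    """Build a single-statement IAM policy document as a plain dict."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": effect,
                "Action": list(actions),
                "Resource": list(resources),
            }
        ],
    }


# Mirrors the actions and resources used for pol_LambdaDescribeEC2.
describe_ec2 = policy_document(["ec2:Describe*"], ["*"])
print(json.dumps(describe_ec2, indent=2))
```

The effect, actions and resources arguments of PolicyStatement map directly onto the Effect, Action and Resource keys of a statement like this.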

So with that, we have our SQS queue, DynamoDB tables, and IAM roles and policies ready for our Lambda functions. In the next part we will glue this all together and deploy our complete stack.

In case you missed the first part, check out the first article in this series – A Serverless EC2 Inventory with the AWS CDK (part 1).
